Getting Started

Overview

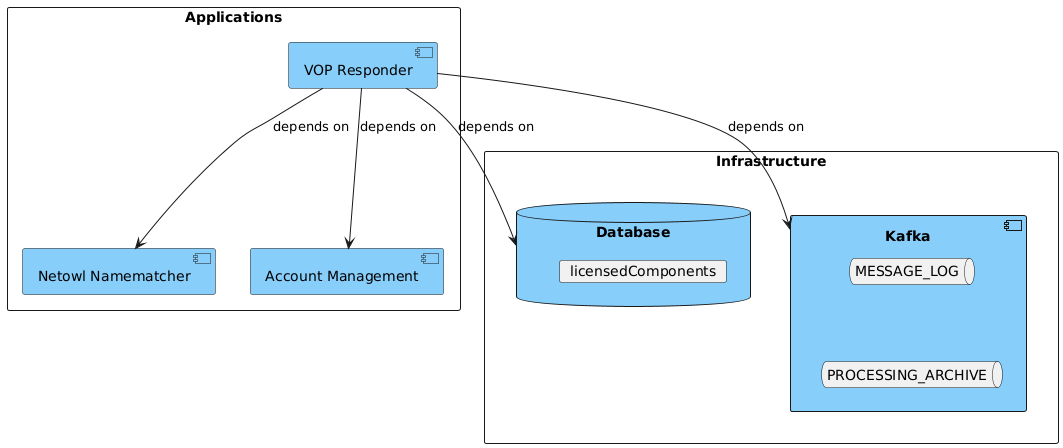

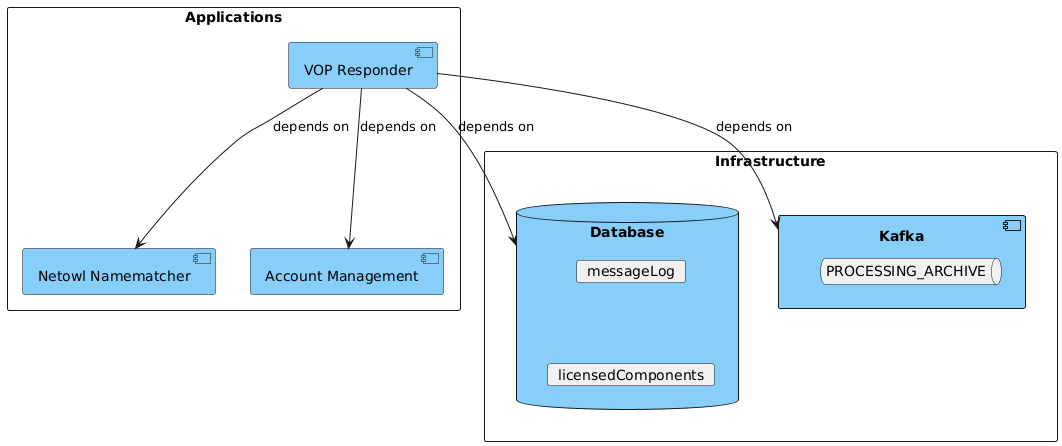

The deployment differs slightly depending on whether message logging is configured to use Kafka or a database.

Kafka Message Logging

- Netowl Namematcher - Used by VoP via Identity Resolution (embedded) as the third party provider for name matching.
- Account Management - Used by VoP for looking up the account details used in matching
- Database - Used for licensing
- Kafka - Used by VoP for message logging and storing the processing archive

Database Message Logging

- Netowl Namematcher - Used by VoP via Identity Resolution (embedded) as the third party provider for name matching.
- Account Management - Used by VoP for looking up the account details used in matching
- Database - Used for licensing and message logging
- Kafka - Used by VoP for storing the processing archive

Configuration and Runtime

Verification of Payee (VoP) is a stand-alone application that can be run as a service, much like any other IPF application deployment. The VoP Responder service can be run as a single instance or as multiple instances.

Configuration

The following application configuration is the minimum required for the VoP Responder service to run in the Docker Compose deployment described below, using Kafka for message logging and MongoDB for licensing.

| VoP Responder does not require an Akka Cluster to be configured. |

```
ipf {
  mongodb.url = "mongodb://ipf-mongo:27017/vop" # (1)
}

common-kafka-client-settings {
  bootstrap.servers = "kafka:9092" # (2)
}

akka {
  kafka {
    producer {
      kafka-clients = ${common-kafka-client-settings}
    }
    consumer {
      kafka-clients = ${common-kafka-client-settings}
      kafka-clients.group.id = vop-responder-consumer-group
    }
  }
}

ipf.verification-of-payee.responder {
  name-match { # (3)
    thresholds = [
      {
        processing-entity = "default",
        scorings: [
          {type: "default", lowerbound = 0.80, upperbound = 0.90}
        ]
      }
    ]
  }
  account-management.http.client {
    host = account-management-sim # (4)
    port = 8080
  }
  country-filtering.enabled = true # (5)
  scheme-allowed-countries = [
    {
      scheme: EPC, # (5)
      regulatory-date-timezone: "Europe/Berlin" # (5)
      allowed-countries: [
        {country-code: "de", regulatory-date: "2026-09-17"}, # (5)
        {country-code: "fr"}
      ]
    }
  ]
}

identity-resolution.comparison.netowl {
  default {
    http.client {
      host = netowl-namematcher # (6)
      port = 8080
    }
  }
  table {
    http.client {
      host = netowl-namematcher # (6)
      port = 8080
    }
  }
}
```

Note the following key aspects:

| 1 | Set the MongoDB URL as appropriate for your environment |

| 2 | Set the Kafka URL as appropriate for your environment |

| 3 | Set the Name Matching thresholds required for your implementation |

| 4 | Set the Account Management hostname and port as appropriate for your environment |

| 5 | Set the Country Filtering and define which country codes are allowed for which scheme with optional regulatory date and time zone |

| 6 | Set the Identity Resolution Netowl hostname and port as appropriate for your environment |
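To illustrate what the `lowerbound` and `upperbound` values in callout 3 could mean in practice, here is a minimal Python sketch. It is not taken from the VoP Responder code base: the three outcome labels, the inclusive boundaries, and the `Scoring` and `classify` names are assumptions made for illustration only, so check the name matching documentation for the actual behaviour.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scoring:
    """Mirrors one entry of the `scorings` list (field names from the config)."""
    lowerbound: float
    upperbound: float


def classify(score: float, scoring: Scoring) -> str:
    """Map a name matching score to an outcome.

    Assumption: scores at or above `upperbound` are a full match, scores
    between the two bounds are a close match, and anything lower is no match.
    The labels and the inclusive comparisons are hypothetical.
    """
    if score >= scoring.upperbound:
        return "MATCH"
    if score >= scoring.lowerbound:
        return "CLOSE_MATCH"
    return "NO_MATCH"


# Values taken from the sample configuration above.
default_scoring = Scoring(lowerbound=0.80, upperbound=0.90)

for example_score in (0.95, 0.85, 0.50):
    print(example_score, classify(example_score, default_scoring))
```

Under these assumptions, widening the gap between the two bounds would send more results to the intermediate outcome, while raising `lowerbound` would turn more of them into non-matches.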

Running

Docker Compose

The following Docker Compose file defines a VoP Responder service deployment containing all the required infrastructure and applications (Kafka, MongoDB, Netowl, Account Management) needed to run the VoP Responder application. This sample deployment uses Kafka for message logging and MongoDB for licensing.

| This is a sample configuration and does not address performance or resiliency concerns, which will be covered in another section in a future release. |

Docker Compose

```
services:
  # Infrastructure
  ipf-mongo:
    image: ${docker.registry}/ipf-docker-mongodb:latest
    container_name: ipf-mongo
    ports:
      - "27018:27017"
    healthcheck:
      test: echo 'db.runCommand("ping").ok' | mongo localhost:27017/test --quiet

  kafka:
    image: apache/kafka-native:4.0.0
    container_name: kafka
    ports:
      - "9092:9092"
      - "9093:9093"
    environment:
      - KAFKA_NODE_ID=1
      - KAFKA_AUTO_CREATE_TOPICS_ENABLE=true
      - KAFKA_CONTROLLER_QUORUM_VOTERS=1@localhost:9094
      - KAFKA_CONTROLLER_LISTENER_NAMES=CONTROLLER
      - KAFKA_PROCESS_ROLES=broker,controller
      - KAFKA_LOG_RETENTION_MINUTES=10
      - KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR=1
      - KAFKA_OFFSETS_TOPIC_NUM_PARTITIONS=1
      - KAFKA_LISTENERS=PLAINTEXT://:9092,LOCAL://:9093,CONTROLLER://:9094
      - KAFKA_LISTENER_SECURITY_PROTOCOL_MAP=LOCAL:PLAINTEXT,PLAINTEXT:PLAINTEXT,CONTROLLER:PLAINTEXT
      - KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://kafka:9092,LOCAL://localhost:9093
      - KAFKA_CREATE_TOPICS=MESSAGE_LOG:1:1,PROCESSING_ARCHIVE:1:1

  # Apps
  account-management-sim:
    image: ${docker.registry}/account-management-simulator:${project.version}
    container_name: account-management-sim
    ports:
      - "8082:8080"
      - "55555:55555"
      - "5006:5005"
    volumes:
      - ${docker-logs-directory}:/ipf/logs
      - ./config/account-management-sim:/account-management-simulator/conf
    user: "1000:1000"
    healthcheck:
      test: [ "CMD", "curl", "-f", "http://localhost:55555/statistics" ]
      interval: 1s
      timeout: 1s
      retries: 90

  netowl-namematcher:
    image: ${docker.registry}/netowl-namematcher:4.9.5.2
    container_name: netowl-namematcher
    ports:
      - "8081:8080"
    volumes:
      - ./config/netowl-namematcher:/var/local/netowl-data/
    healthcheck:
      test: [ "CMD", "curl", "-f", "http://localhost:8080/api/v2" ]
      interval: 1s
      timeout: 1s
      retries: 90

  vop-responder-app:
    image: ${docker.registry}/verification-of-payee-responder-application:${project.version}
    container_name: vop-responder-app
    ports:
      - "8080:8080"
      - "5005:5005"
    volumes:
      - ${docker-logs-directory}:/ipf/logs
      - ./config/vop-responder-app:/verification-of-payee-responder-application/conf
    user: "1000:1000"
    environment:
      - IPF_JAVA_ARGS=-Dma.glasnost.orika.writeClassFiles=false -Dma.glasnost.orika.writeSourceFiles=false -Dconfig.override_with_env_vars=true
    depends_on:
      - ipf-mongo
      - kafka
    healthcheck:
      # -f makes curl exit non-zero on HTTP error responses, so an unhealthy status is detected
      test: [ "CMD", "curl", "-f", "http://localhost:8080/actuator/health" ]
```

Note the following key aspects:

- MongoDB and Kafka containers are third party images and not supplied by Icon Solutions
- The Account Management Simulator is included for illustration purposes only and is not supplied by Icon Solutions. In a real deployment it should be replaced by a customer implementation conforming to the Account Management API.
- The Netowl image above is a third party container not supplied by Icon Solutions and is currently being used by Identity Resolution Service, which is running embedded in the VoP Responder app. For more details on this, please review the Identity Resolution documentation. If a separate instance already exists in the customer environment, any customisations should be re-applied here if using a new image.
- The VoP Responder app is created by Icon Solutions and hosted in our ipf-releases repository in Nexus.
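Once the stack is started, it can be useful to wait for the published health endpoints before sending requests. The following Python sketch polls an endpoint until it answers with a 2xx status. The URLs use the host ports and paths from the sample above; the function names, the retry count, and the delay are assumptions chosen for illustration, not part of the product.

```python
import time
import urllib.error
import urllib.request
from typing import Callable

# Host-side URLs derived from the port mappings and healthchecks in the sample.
ENDPOINTS = {
    "vop-responder-app": "http://localhost:8080/actuator/health",
    "account-management-sim": "http://localhost:55555/statistics",
    "netowl-namematcher": "http://localhost:8081/api/v2",
}


def http_ok(url: str, timeout: float = 2.0) -> bool:
    """Return True if the URL answers with a 2xx status, False otherwise."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except (urllib.error.URLError, OSError):
        # Covers refused connections, timeouts and HTTP error statuses (e.g. 503).
        return False


def wait_until_healthy(
    url: str,
    probe: Callable[[str], bool] = http_ok,
    attempts: int = 90,
    delay_seconds: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll `url` until `probe` succeeds or `attempts` is exhausted."""
    for attempt in range(attempts):
        if probe(url):
            return True
        if attempt < attempts - 1:
            sleep(delay_seconds)
    return False
```

For example, `all(wait_until_healthy(url) for url in ENDPOINTS.values())` would return `True` only once every service in the sample responds successfully. The `probe` and `sleep` parameters are injectable so that the loop can be exercised without a running stack.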

Kubernetes

The following Kubernetes configuration defines a VoP Responder service deployment with the recommended settings for deploying the VoP Responder application. This sample deployment uses Kafka for message logging.

| This is a sample configuration and does not address performance concerns, which will be covered in another section in a future release. |

ConfigMap

```
apiVersion: v1
kind: ConfigMap
metadata:
  name: responder-config
  labels:
    app: verification-of-payee-responder
    product: ipfv2
data:
  application.conf: |
    ipf.mongodb.url = "mongodb://mongo:27017/vop"
    server.port = 8081
    identity-resolution.comparison.netowl {
      default {
        http.client {
          host = localhost
          port = 8080
        }
      }
      table {
        http.client {
          host = localhost
          port = 8080
        }
      }
    }
    ipf.verification-of-payee.responder {
      name-match {
        thresholds = [
          {
            processing-entity = "default",
            scorings: [
              {type: "default", lowerbound = 0.80, upperbound = 0.90}
            ]
          }
        ]
      }
      account-management.http.client {
        host = account-management
        port = 8080
      }
      country-filtering.enabled = true
      scheme-allowed-countries = [
        {
          scheme: EPC,
          regulatory-date-timezone: "Europe/Berlin"
          allowed-countries: [
            {country-code: "de", regulatory-date: "2026-09-17"},
            {country-code: "fr"}
          ]
        }
      ]
    }
    common-kafka-client-settings {
      bootstrap.servers = "kafka:9092"
    }
    akka {
      kafka {
        producer {
          kafka-clients = ${common-kafka-client-settings}
        }
        consumer {
          kafka-clients = ${common-kafka-client-settings}
          kafka-clients.group.id = vop-responder-consumer-group
        }
      }
    }
  license.key: |-
    {
      "product":"NameMatcher",
      "type":"Development",
      "thread limit":1,
      "*":{
        "lifespan":[ "2022-01-01 00:00Z", "2026-01-01 00:00Z" ],
        "features":[ "Latin" ]
      },
      "verification":<hidden>
    }
```

Deployment

```
apiVersion: apps/v1
kind: Deployment
metadata:
  name: verification-of-payee-responder-application
  labels:
    app: verification-of-payee-responder-application
    product: ipfv2
spec:
  replicas: 3
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 0
      maxSurge: 1
  selector:
    matchLabels:
      app: verification-of-payee-responder-application
      product: ipfv2
  template:
    metadata:
      labels:
        app: verification-of-payee-responder-application
        product: ipfv2
    spec:
      imagePullSecrets:
        - name: registry-credentials
      terminationGracePeriodSeconds: 10
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
            - weight: 100
              podAffinityTerm:
                labelSelector:
                  matchExpressions:
                    - key: app
                      operator: In
                      values:
                        - verification-of-payee-responder-application
                topologyKey: "kubernetes.io/hostname"
      containers:
        - name: verification-of-payee-responder-application
          image: registry.ipf.iconsolutions.com/verification-of-payee-responder-application:latest
          ports:
            - containerPort: 8081
              name: server-port
            - containerPort: 5005
              name: debug-port
          env:
            - name: "IPF_JAVA_ARGS"
              value: "-Dma.glasnost.orika.writeClassFiles=false -Dma.glasnost.orika.writeSourceFiles=false"
          livenessProbe:
            httpGet:
              path: /actuator/health
              port: "server-port"
              scheme: HTTP
            initialDelaySeconds: 60
            periodSeconds: 5
            timeoutSeconds: 1
            failureThreshold: 3
            successThreshold: 1
          readinessProbe:
            httpGet:
              path: /actuator/health
              port: server-port
              scheme: HTTP
            initialDelaySeconds: 60
            periodSeconds: 5
            timeoutSeconds: 1
            failureThreshold: 3
            successThreshold: 1
          startupProbe:
            httpGet:
              path: /actuator/health
              port: server-port
              scheme: HTTP
            failureThreshold: 30
            periodSeconds: 10
          volumeMounts:
            - name: application-config
              mountPath: /verification-of-payee-responder-application/conf/application.conf
              subPath: application.conf
        - name: netowl-namematcher
          image: registry.ipf.iconsolutions.com/netowl-namematcher:latest
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 8080
              name: netowl-port
          livenessProbe:
            httpGet:
              path: /api/v2/healthy
              port: 8080
            initialDelaySeconds: 15
            timeoutSeconds: 2
            periodSeconds: 5
          volumeMounts:
            - name: netowl-data
              mountPath: /var/local/netowl-data
            - name: application-config
              mountPath: /var/local/netowl-data/license.key
              subPath: license.key
      volumes:
        - name: application-config
          configMap:
            name: responder-config
        - name: netowl-data
          emptyDir: {}
```

Service

```
apiVersion: v1
kind: Service
metadata:
  name: verification-of-payee-responder-application
spec:
  selector:
    app: verification-of-payee-responder-application
  ports:
    - protocol: TCP
      port: 8081
      targetPort: 8081
      name: server-port
```

Note the following key aspects:

- Infrastructure services such as Kafka and MongoDB are not included in the above configuration and are not supplied by Icon Solutions.
- The Netowl image above is a third party container not supplied by Icon Solutions and is currently being used by Identity Resolution Service, which is running embedded in the VoP Responder app. For more details on this, please review the Identity Resolution documentation. If a separate instance already exists in the customer environment, any customisations should be re-applied here if using a new image.
- The VoP Responder app is created by Icon Solutions and hosted in our ipf-releases repository in Nexus.
- The number of replicas has been set to 3 for load balancing.
- Liveness, Readiness and Startup probes have been configured to support node and pod stability, but these can be modified if required.
- Pod Anti-Affinity has been configured to spread pods across multiple nodes to prevent a single point of failure.
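The replica, rollout and probe values in the sample translate into concrete bounds and time windows. The short Python sketch below works them out using standard Kubernetes semantics: consecutive failures multiplied by the probe period, and replicas plus or minus the rolling update limits. The `Probe` class and `rollout_bounds` function are hypothetical helpers written for this illustration.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Probe:
    period_seconds: int
    failure_threshold: int

    def failure_window_seconds(self) -> int:
        """Approximate time of consecutive failures before Kubernetes acts."""
        return self.period_seconds * self.failure_threshold


def rollout_bounds(replicas: int, max_unavailable: int, max_surge: int) -> Tuple[int, int]:
    """Return (minimum available pods, maximum total pods) during a rolling update.

    Only absolute numbers are handled here; percentages are out of scope.
    """
    return replicas - max_unavailable, replicas + max_surge


# Values taken from the sample Deployment above.
startup = Probe(period_seconds=10, failure_threshold=30)
liveness = Probe(period_seconds=5, failure_threshold=3)
min_available, max_total = rollout_bounds(replicas=3, max_unavailable=0, max_surge=1)

print(f"Startup budget: {startup.failure_window_seconds()}s")
print(f"Liveness failure window: {liveness.failure_window_seconds()}s")
print(f"Pods during rollout: between {min_available} and {max_total}")
```

With the sample values, the application has up to 300 seconds to pass its startup probe, a running container is restarted after roughly 15 seconds of failed liveness checks, and a rolling update never drops below 3 available pods while creating at most 4 in total.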

Startup Errors

VoP Responder requires a database connection during application startup, regardless of the configured message logging type, due to IPF Licensing. If the application cannot connect to the database during startup, it will be marked as unhealthy. It will keep attempting to reconnect, and once a connection is successfully established, the application will transition to a healthy state. All connection failures and retry attempts will be logged to the application log at the WARN level.

When Kafka is selected as the message logging type, the application also attempts to connect to Kafka at startup. A failure to connect to Kafka is logged but, by default, does not affect the application's health status. This prevents message logging problems from stopping VoP requests being processed.

If message logging is considered critical and Kafka connectivity issues should impact the application’s health and core functionality, you can enable this behavior by setting:

message.logger.send-connector.resiliency-settings.enabled = true
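The sample Docker Compose deployment starts the application with `-Dconfig.override_with_env_vars=true`, which is the Lightbend Config switch that allows `CONFIG_FORCE_` environment variables to override configuration paths. Assuming the application honours that mechanism in the standard way, the setting above could also be supplied as an environment variable. The Python sketch below derives the variable name using the library's documented mangling rules (dot becomes `_`, dash becomes `__`, underscore becomes `___`); the helper name is hypothetical and quoted path segments are not handled.

```python
def config_force_env_name(path: str) -> str:
    """Convert a HOCON path into its CONFIG_FORCE_ environment variable name.

    Underscores are expanded first so that the underscores introduced for
    dashes and dots are not expanded again.
    """
    mangled = path.replace("_", "___").replace("-", "__").replace(".", "_")
    return "CONFIG_FORCE_" + mangled


setting = "message.logger.send-connector.resiliency-settings.enabled"
print(f"{config_force_env_name(setting)}=true")
```

The printed line could be added to the `environment` list of the `vop-responder-app` service. In the Kubernetes sample, `IPF_JAVA_ARGS` does not include the override flag, so there the setting would more naturally go into `application.conf` in the ConfigMap.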

| If the database becomes unavailable after the VoP Responder has started, then the VoP Responder service will become unavailable. |