Migration Steps for IPF-2025.3.0

This page covers the migration steps required for IPF Release 2025.3.0.

Migration of DSL Flows

The previously deprecated 'ResponseCodeLibrary' concept has now been removed. If your solution still contains one, open that concept, press F6 and select the location where the response code set should be stored.

We recommend opening your Flo solutions and clicking OK on the migration prompt. There is one migration in this release. It provides UI improvements only, so it is not required.

A new custom operation capability has been added, exposed through a new "performOperation" method on the domain specification. No changes are required to use it. However, if you have created your own ModelOperations implementation (for example, in tests), you will need to add an implementation of this method.

Migrations for Scheme Packs

BDD integrity tests now correctly fail if TechnicalResponse or ValidateSchemeRuleResponse messages have been sent but not asserted within your tests. If any of these messages is not asserted, the test run fails in the afterStories section.

You will need to add appropriate assertions to your tests to ensure all messages are processed.

Example assertions:

Then Payment Service receives a 'Technical Response' with values:
| status | SUCCESS |

Then the Payment Service receives a 'Validate Scheme Rules Response' with values:
| status | FAILURE |
| payload.content.reasonCode | FF01 |

If you have already asserted for all messages, no changes are necessary.

Migration for SEPA CT

The following system events have been moved to a different Maven module and package. If you reference any of them, add the new Maven dependency and update the package:

| Name |
|---|
| BulkCommandFailed |
| ReceiveFromSchemeExtensionPointFailed |
| SendingSchemeResponseFailed |
| SendToSchemeExtensionPointFailed |
| SendToSchemeExtensionPointSuccess |

Previous package: com.iconsolutions.ipf.sepact.core.events

New package: com.iconsolutions.ipf.payments.csm.sepa.common.events
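Only these five classes moved. A blanket find-and-replace of the old package name could therefore also rewrite imports of classes that stayed behind. The Java sketch below is a hypothetical helper (SepaCtEventImportRewriter is not part of IPF). It rewrites single-class import statements for the five listed events and nothing else. Wildcard imports and fully qualified references inside code bodies are not handled and would need manual review.

```java
import java.util.Set;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper: moves imports of the five relocated SEPA CT events to their new package. */
final class SepaCtEventImportRewriter {
    static final String OLD_PACKAGE = "com.iconsolutions.ipf.sepact.core.events";
    static final String NEW_PACKAGE = "com.iconsolutions.ipf.payments.csm.sepa.common.events";

    // Only these classes moved; other classes in OLD_PACKAGE keep their imports
    static final Set<String> MOVED_EVENTS = Set.of(
            "BulkCommandFailed",
            "ReceiveFromSchemeExtensionPointFailed",
            "SendingSchemeResponseFailed",
            "SendToSchemeExtensionPointFailed",
            "SendToSchemeExtensionPointSuccess");

    // Single-class imports from the old package, e.g. "import <OLD_PACKAGE>.BulkCommandFailed;"
    private static final Pattern OLD_IMPORT = Pattern.compile(
            "^import\\s+" + Pattern.quote(OLD_PACKAGE) + "\\.(\\w+)\\s*;",
            Pattern.MULTILINE);

    static String rewrite(String source) {
        Matcher m = OLD_IMPORT.matcher(source);
        StringBuilder out = new StringBuilder();
        while (m.find()) {
            String className = m.group(1);
            String replacement = MOVED_EVENTS.contains(className)
                    ? "import " + NEW_PACKAGE + "." + className + ";"
                    : m.group(); // not a moved event: leave the import unchanged
            m.appendReplacement(out, Matcher.quoteReplacement(replacement));
        }
        m.appendTail(out);
        return out.toString();
    }
}
```

Restricting the rewrite to a fixed set of class names is deliberate, because the old package is not being removed wholesale.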

Previous Maven module:

<dependency>
    <groupId>com.iconsolutions.ipf.payments.csm.sepact</groupId>
    <artifactId>sepact-csm-events</artifactId>
</dependency>

New Maven module:

<dependency>
    <groupId>com.iconsolutions.ipf.payments.csm.sepa.common</groupId>
    <artifactId>sepa-common-events</artifactId>
</dependency>

Migration for Connectors

Transport Message Processing Context headers

| The changes below should only be required in test code, as the presence of these headers depends on connector configuration. If they are used in production code, more careful consideration is needed: both the old and the new headers may need to be used for Kafka/JMS, but ideally the context should be passed via the message payload instead. |

The deprecated ProcessingContext header keys have been removed. Replace all occurrences of:

- com.iconsolutions.ipf.core.shared.domain.context.ProcessingContext.UOW_ID_HEADER with the com.iconsolutions.ipf.core.shared.domain.context.propagation.ContextPropagationSettings.UNIT_OF_WORK_ID_CONTEXT_KEY key
- com.iconsolutions.ipf.core.shared.domain.context.ProcessingContext.PROCESSING_ENTITY_HEADER with the com.iconsolutions.ipf.core.shared.domain.context.propagation.ContextPropagationSettings.PROCESSING_ENTITY_CONTEXT_KEY key
- com.iconsolutions.ipf.core.shared.domain.context.ProcessingContext.ASSOCIATION_ID_HEADER with the com.iconsolutions.ipf.core.shared.domain.context.propagation.ContextPropagationSettings.ASSOCIATION_ID_CONTEXT_KEY key
- com.iconsolutions.ipf.core.shared.domain.context.ProcessingContext.REQUEST_ID_HEADER with the com.iconsolutions.ipf.core.shared.domain.context.propagation.ContextPropagationSettings.CLIENT_REQUEST_ID_CONTEXT_KEY key
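In test code with many occurrences, the four renames can be applied mechanically. The sketch below is a hypothetical text-based rewrite (ProcessingContextKeyMigration is not an IPF class). It handles both the fully qualified form and the short ProcessingContext.X form. It does not add the ContextPropagationSettings import, so imports still need fixing by hand.

```java
import java.util.Map;

/** Hypothetical text-based rewrite of the removed ProcessingContext header keys. */
final class ProcessingContextKeyMigration {
    static final String OLD_CLASS =
            "com.iconsolutions.ipf.core.shared.domain.context.ProcessingContext";
    static final String NEW_CLASS =
            "com.iconsolutions.ipf.core.shared.domain.context.propagation.ContextPropagationSettings";

    // Removed ProcessingContext constant -> ContextPropagationSettings replacement
    static final Map<String, String> RENAMES = Map.of(
            "UOW_ID_HEADER", "UNIT_OF_WORK_ID_CONTEXT_KEY",
            "PROCESSING_ENTITY_HEADER", "PROCESSING_ENTITY_CONTEXT_KEY",
            "ASSOCIATION_ID_HEADER", "ASSOCIATION_ID_CONTEXT_KEY",
            "REQUEST_ID_HEADER", "CLIENT_REQUEST_ID_CONTEXT_KEY");

    static String rewrite(String source) {
        String result = source;
        for (Map.Entry<String, String> e : RENAMES.entrySet()) {
            // Fully qualified references first, so the short-form pass cannot match inside them
            result = result.replace(OLD_CLASS + "." + e.getKey(), NEW_CLASS + "." + e.getValue());
            // Short references such as ProcessingContext.UOW_ID_HEADER (the import is not updated)
            result = result.replaceAll("\\bProcessingContext\\." + e.getKey() + "\\b",
                    "ContextPropagationSettings." + e.getValue());
        }
        return result;
    }
}
```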

- The MessageHeaders.fromProcessingContext() method has been removed. Use ProcessingContextUtils.toMap() instead:

MessageHeaders headers = new MessageHeaders(ProcessingContextUtils.toMap(processingContext));

- The MessageHeaders.toProcessingContext() method has been removed. Use ProcessingContextUtils.fromMap() instead:

ProcessingContext processingContext = ProcessingContextUtils.fromMap(messageHeaders.getHeaderMap());

- ProcessingContextUtils.ProcessingContextHeaderExtractor has been moved to a standalone class, com.iconsolutions.ipf.core.connector.common.ProcessingContextHeaderExtractor. Replace:

return t -> new ProcessingContextUtils.ProcessingContextHeaderExtractor().extract(t);

with:

return new ProcessingContextHeaderExtractor();

Correlation index creation

- Rename any usage of the ipf.correlation.index-creation.enabled config property to ipf.connector.correlation.create-indexes.
- Rename any usage of the ipf.connector.correlation-timestamp-field-name config property to ipf.connector.correlation.timestamp-field-name.
- Rename any usage of the ipf.connector.correlation-expiry config property to ipf.connector.correlation.time-to-live.
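If your configuration is held as flattened key/value pairs (for example, properties-style overrides), the renames can be checked with a small helper. This is a hypothetical sketch, not IPF tooling. It renames the three old keys and refuses to proceed when both the old and the new key are set, because it cannot know which value is intended. Nested HOCON blocks are not handled and need manual review.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper: renames the 2025.3.0 correlation config keys in a flattened config map. */
final class CorrelationConfigMigration {
    // Old config property -> new config property
    static final Map<String, String> RENAMES = Map.of(
            "ipf.correlation.index-creation.enabled", "ipf.connector.correlation.create-indexes",
            "ipf.connector.correlation-timestamp-field-name", "ipf.connector.correlation.timestamp-field-name",
            "ipf.connector.correlation-expiry", "ipf.connector.correlation.time-to-live");

    static Map<String, String> migrate(Map<String, String> config) {
        Map<String, String> result = new LinkedHashMap<>();
        for (Map.Entry<String, String> e : config.entrySet()) {
            String oldKey = e.getKey();
            String newKey = RENAMES.getOrDefault(oldKey, oldKey);
            if (!newKey.equals(oldKey) && config.containsKey(newKey)) {
                // Both spellings present: refuse to guess which value should win
                throw new IllegalStateException(oldKey + " and " + newKey + " are both set");
            }
            result.put(newKey, e.getValue());
        }
        return result;
    }
}
```

Keys that are not in the rename table pass through unchanged.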

Migration for SEPA DD

Currently, clients depending on SEPA DD are still in development or test, so the recommended approach for migrating to this version is to deploy with a new journal database.

CSM Client

Several configuration items have been renamed to consolidate topics and queues. Instead of one per message type, direction and role, there is now one per direction and role:

| Old config item | New config item |
|---|---|
| | |
| | |
| | |
| | |
| | |
| | |
| | |
| | |

CSM Service

Several configuration items have been renamed to consolidate topics and queues. Instead of one per message type, direction and role, there is now one per direction and role:

| Old config item | New config item |
|---|---|
| | |
| | |
| | |
| | |
| | |
| | |
| | |
| | |

Migration for JAXB

This release of IPF removes support for the javax.xml.bind API (JAXB) in favour of the jakarta.xml.bind API (Jakarta XML Binding).

You may still be able to use JAXB, but we recommend upgrading to the Jakarta XML Binding API to ensure forward compatibility with IPF and Jakarta EE. JAXB was deprecated in Java 9 and removed from the JDK in Java 11.

Step 1: Replace Dependencies and Plugins

Replace your JAXB API and JAXB implementation Maven dependencies and plugins with their Jakarta equivalents, using the following table:

| Old dependency | New dependency | Note |
|---|---|---|
| | | This is the JAXB Maven Plugin |
| | | This is usually a JAXB Maven Plugin dependency |
| | | This is usually a JAXB Maven Plugin dependency |
| | | |

Step 2: Change Package Names

Replace all occurrences of javax.xml.bind with jakarta.xml.bind. The API has not changed, so no further code changes should be necessary.

Step 3: Change Binding File

The schemaBindings file used to customise schema compilation has to be updated for Jakarta bindings.

First, change the version attribute from 2.1 to 3.0.

The following namespaces have to change:

| Old namespace | New namespace |
|---|---|
| | |
| | |
| | |

Migration for MPS Build process

MPS can now run both the Dot-to-SVG process and the IPF conf merge process as part of its internal Make process, instead of requiring dedicated Maven plugins.

This has many benefits:

- It simplifies the build process and helps decouple the IPF build process from Maven where appropriate.
- It prevents usability issues caused by Maven-specific transitive folder structures in the target directory being removed when Flow-related tests are run within an IDE. Previously this showed up as an error about Akka roles not being found when a test was executed in an IDE. The error persisted even when the "clear generated-sources" IDE setting was correctly deselected.

In the current release (2025.3.0), this approach is opt-in rather than automatic, but we recommend migrating now. In future releases the default behaviour will gradually change and the Maven plugins will be deprecated. Eventually this approach will be the only supported mechanism.

Whether these processes run as part of generation is configured independently for the MPS IDE and for command-line builds.

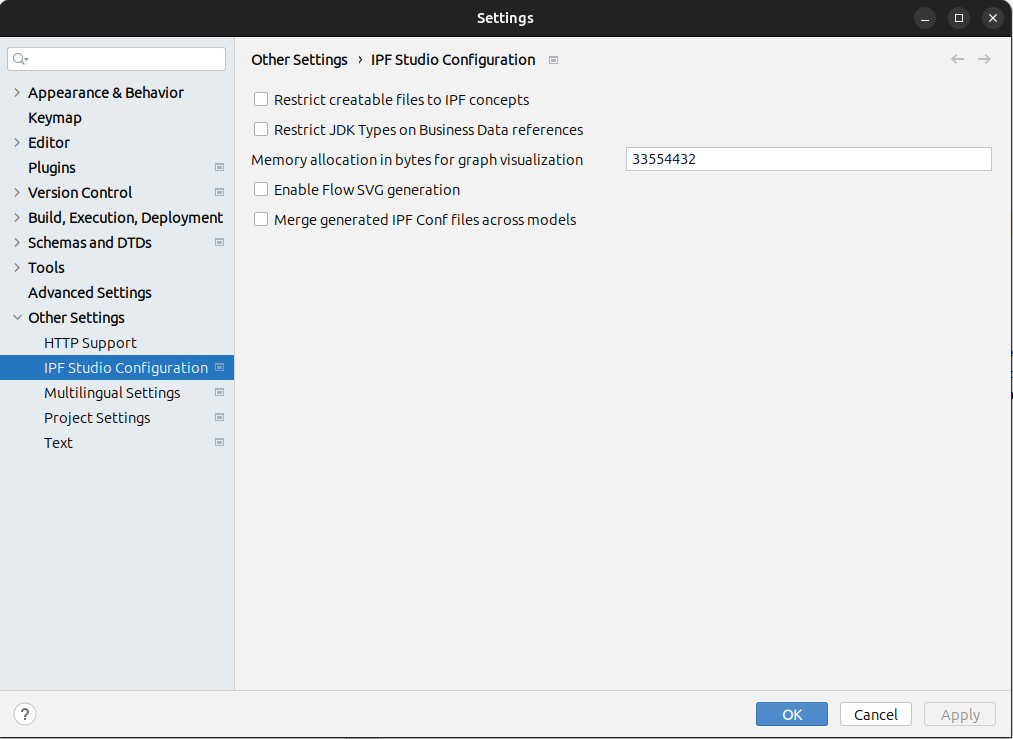

Enabling within IDE

To enable these features within the IDE, use the two new options in the configuration pane:

Enable Flow SVG generation - This enables the production of SVG images of the Flow graphs as part of generation.

Merge generated IPF Conf files across models - This enables merging of ipf.conf files into a single file per solution as part of the generation process.

Enabling within Maven

To enable these features as part of a Maven build, you need to:

- Add a Maven property.
- Update the build script if the project uses MPS BuildScripts. No change is needed if the project uses the icon-mps-runner plugin instead of BuildScripts.
- Choose different tiles within the mps and domain modules.

Maven property

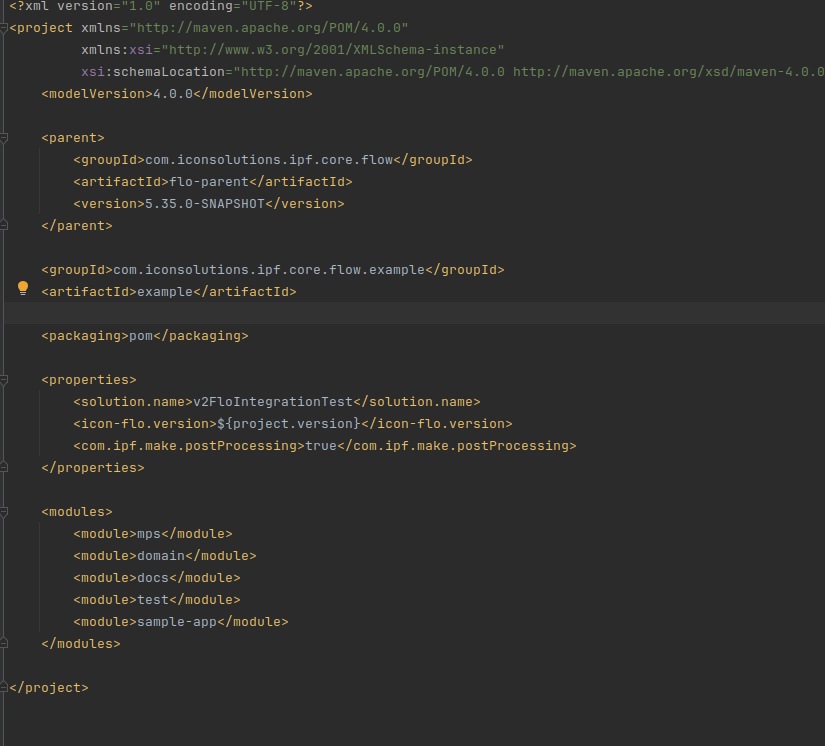

Add the following property to the domain-root pom:

<com.ipf.make.postProcessing>true</com.ipf.make.postProcessing>

The following shows an example of this property in the context of the expected domain-root pom.

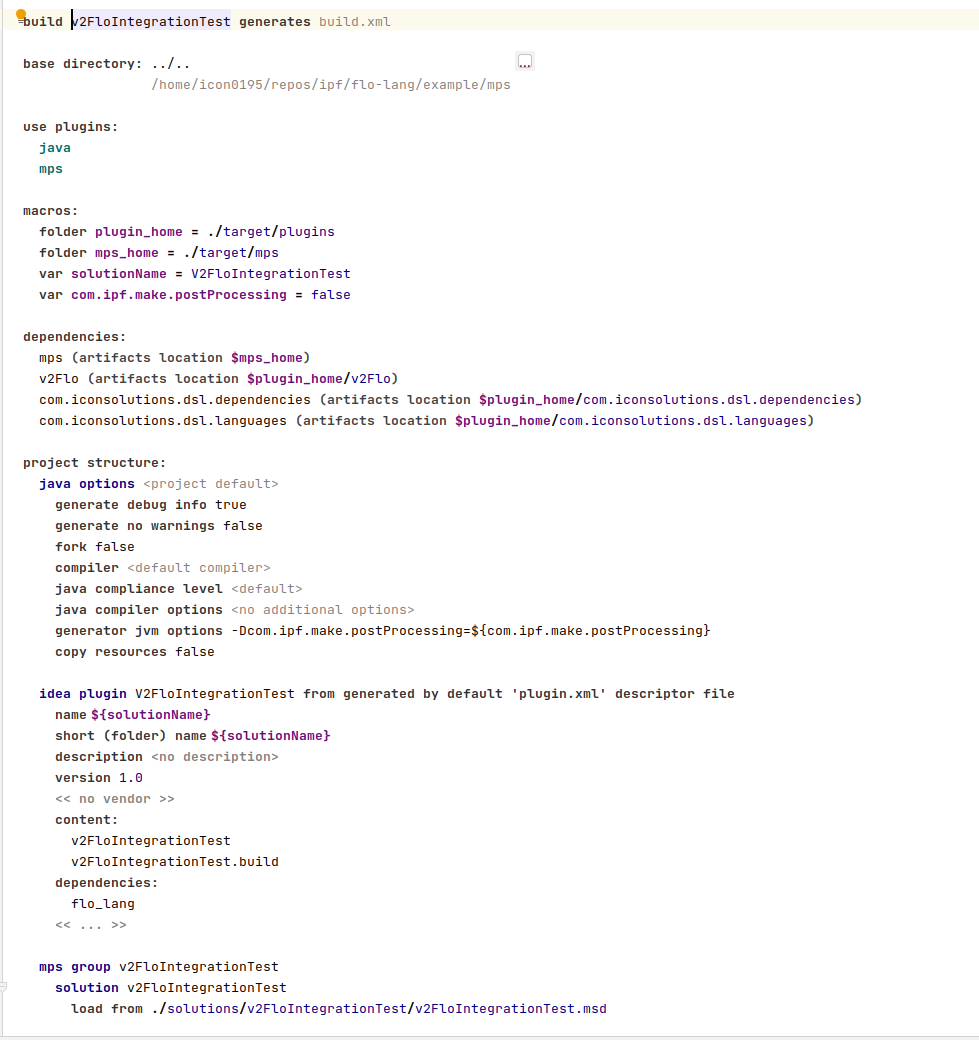

Update BuildScript

If your MPS project uses BuildScripts, you also need to propagate this property into the underlying generation process. This requires two changes:

- Add com.ipf.make.postProcessing as a var.
- Add -Dcom.ipf.make.postProcessing=${com.ipf.make.postProcessing} as a generator JVM arg.

E.g.

Alternate Tiles

With these changes in place, select different tiles in the mps and domain modules. This ensures that the plugins that previously handled these features are NOT applied.

MPS

Within the mps directory, if you use:

com.iconsolutions.ipf.core.flow:flo-mps-plugin-tile:@project.version@

use this instead:

com.iconsolutions.ipf.core.flow:flo-mps-plugin-base-tile:@project.version@

If you use:

com.iconsolutions.ipf.core.flow:flo-mps-tile:@project.version@

use this instead:

com.iconsolutions.ipf.core.flow:flo-mps-base-tile:@project.version@

Migration for Persistent Scheduler

Migrating FailureCommands to SchedulingHelper.lateExecute

| The following steps only apply to job specifications that define a failure command and a failure identifier. If no such job specifications exist in the system, the migration can be skipped. |

Before applying the migration steps, please read through the latest persistent scheduler docs on this topic.
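A job counts as late when it runs after its desired time plus lateExecutionThreshold. The sketch below illustrates that rule using java.time only. It is hypothetical (LatenessCheck is not part of the persistent scheduler). We assume that the overBy value passed to lateExecute is measured from the end of the threshold window rather than from the desired time. That assumption is ours, so check the persistent scheduler docs before relying on it.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.Optional;

/** Hypothetical illustration of the late-execution rule; not the persistent scheduler's implementation. */
final class LatenessCheck {
    /**
     * Empty if the job runs at or before desired + threshold (timely, so execute),
     * otherwise how far past that deadline it runs (late, so lateExecute).
     * Measuring from the deadline rather than from the desired time is an assumption.
     */
    static Optional<Duration> overBy(Instant desired, Duration lateExecutionThreshold, Instant actual) {
        Instant deadline = desired.plus(lateExecutionThreshold);
        return actual.isAfter(deadline)
                ? Optional.of(Duration.between(deadline, actual))
                : Optional.empty();
    }
}
```

For example, with a 10-second lateExecutionThreshold, a run 25 seconds after the desired time would count as late by 15 seconds under this assumption.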

Given the following job:

var job = JobSpecificationDto.builder()
        .jobRequestor("invoice-service")
        .singleSchedule(LocalDateTime.now().plusSeconds(30)) // desired execution
        .zoneId(ZoneId.of("Europe/Madrid"))
        .triggerIdentifier("order-123")
        .triggerCommand(new RunInvoiceCommand())
        .lateExecutionThreshold(Duration.ofSeconds(10)) // after desired+10s we treat it as late
        .failureIdentifier("order-123")
        .failureCommand(new RunInvoiceCommandFailed())
        .build();

schedulingModule.scheduleJob(job);

and its SchedulingHelpers:

public class BillingHelper implements SchedulingHelper {

    @Override
    public boolean supports(SchedulingCommand cmd) {
        return cmd instanceof RunInvoiceCommand;
    }

    // timely execution
    @Override
    public CompletionStage<Void> execute(String id, SchedulingCommand cmd) {
        return CompletableFuture.runAsync(() -> doWork(id, cmd));
    }
}

// Handles late execution
public class BillingFailedHelper implements SchedulingHelper {

    @Override
    public boolean supports(SchedulingCommand cmd) {
        return cmd instanceof RunInvoiceCommandFailed;
    }

    // late execution, triggered if execution happens after desired+10s
    @Override
    public CompletionStage<Void> execute(String id, SchedulingCommand cmd) {
        // business logic located in handleLateExecution
        return CompletableFuture.runAsync(() -> handleLateExecution(id, cmd));
    }
}

The business logic in BillingFailedHelper.execute now needs to be moved to BillingHelper.lateExecute. BillingFailedHelper then becomes dedicated to handling actual execution failures:

public class BillingHelper implements SchedulingHelper {

    @Override
    public boolean supports(SchedulingCommand cmd) {
        return cmd instanceof RunInvoiceCommand;
    }

    // timely execution
    @Override
    public CompletionStage<Void> execute(String id, SchedulingCommand cmd) {
        return CompletableFuture.runAsync(() -> doWork(id, cmd));
    }

    // late execution, triggered if execution happens after desired+10s
    // (log is assumed to be an SLF4J Logger, e.g. provided by Lombok's @Slf4j)
    @Override
    public CompletionStage<Void> lateExecute(String id, SchedulingCommand cmd, Duration overBy) {
        log.warn("Job {} is late by {}", id, overBy);
        return CompletableFuture.runAsync(() -> handleLateExecution(id, cmd));
    }
}

// Can be omitted if no failure handling is required/desired
public class BillingFailedHelper implements SchedulingHelper {

    @Override
    public boolean supports(SchedulingCommand cmd) {
        return cmd instanceof RunInvoiceCommandFailed;
    }

    // triggers if executions result in failure
    // contains brand new business logic
    @Override
    public CompletionStage<Void> execute(String id, SchedulingCommand cmd) {
        return CompletableFuture.runAsync(() -> handleFailure(id, cmd));
    }
}

Removal of FailedJobActor

FailedJobActor and its associated code were removed as part of PAY-15068. As a result, there may be existing JobSpecification entries in MongoDB that are no longer executable. These cause errors when JobRehydration is attempted or when the persistent scheduler attempts to execute the job. If these documents are present, they must be cleaned up in every IPF application that uses the IPF persistent scheduler:

db.jobSpecification.remove(
    {"_id.jobSpecificationId" : "process-failed-jobs-scheduler"},
    {justOne: true}
)

Migration for Payment Entries Processor (formerly Payment Releaser)

HTTP implementation

This section explains how to update systems that communicate with the Payment Entries Processor via HTTP.

Using SupportingContext in Request Body

If you are currently supplying SupportingContext in your HTTP request body to the POST /api/v1/payment-releaser/instruction/{instructionUnitOfWorkID}/release and/or POST /api/v1/payment-releaser/transaction/{transactionUnitOfWorkId}/release HTTP endpoints, you may need to make changes as explained below.

If you are using the provided HTTP Client Connectors to connect to the Payment Entries Processor, you do not need to make any changes.

If you are not using the provided HTTP Client Connectors, you will need to update the request body in your request from:

{
    "headers": {
        "fieldA": "value-for-field-A",
        "fieldB": "value-for-field-B"
    },
    "metaData": {
        "fieldC": "value-for-field-C",
        "fieldD": "value-for-field-D"
    }
}

to:

{
    "supportingData": {
        "headers": {
            "fieldA": "value-for-field-A",
            "fieldB": "value-for-field-B"
        },
        "metaData": {
            "fieldC": "value-for-field-C",
            "fieldD": "value-for-field-D"
        }
    }
}

Because the HTTP contract contains a breaking change, upgrading the Payment Entries Processor requires downtime of both the Payment Entries Processor and the calling systems. Upgrade in the following order:

- Bring down the calling system(s).
- Bring down the Payment Entries Processor system.
- Upgrade the Payment Entries Processor system.
- Bring up the calling system(s). Whether they also need upgrading depends on the HTTP client they use, as described earlier in this section.
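For callers that build the request body themselves, the body change amounts to nesting the existing SupportingContext object under a single supportingData field. The following is a minimal sketch. It assumes the body is held as a Map before being serialised by whichever JSON library the caller already uses. ReleaseRequestBodyMigration is a hypothetical name.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper: converts a pre-2025.3.0 release request body to the new shape. */
final class ReleaseRequestBodyMigration {
    static Map<String, Object> toNewBody(Map<String, Object> oldBody) {
        // The old body was the SupportingContext itself ("headers", "metaData");
        // the new contract expects it nested under "supportingData"
        Map<String, Object> newBody = new LinkedHashMap<>();
        newBody.put("supportingData", new LinkedHashMap<>(oldBody));
        return newBody;
    }
}
```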

Embedded implementation

The entry point of the Payment Entries Processor is the PaymentEntriesProcessor Java interface.

You do not need to change how your system calls the PaymentEntriesProcessor interface methods.

If you use the return object of the processInstruction method, you will have to update your system to use the updated return object.

The return type is (and remains) CompletionStage<ExecutionInfo>.

However, the contents of ExecutionInfo have been refactored, which is a breaking change.

After extracting the ExecutionInfo from the CompletionStage, you will need to change how you access the supportingData and actionType fields:

Before upgrade:

public void extract(ExecutionInfo executionInfo) {
    SupportingContext supportingData = executionInfo.getSupportingData();
    ProcessingActionType actionType = executionInfo.getActionType();
}

After upgrade:

public void extract(ExecutionInfo executionInfo) {
    SupportingContext supportingData = executionInfo.getPaymentHandlingInfo().getSupportingData();
    ProcessingActionType actionType = executionInfo.getPaymentHandlingInfo().getActionType();
}

Migrations for CharacterReplacer

Breaking change for removed overloaded replaceCharacters method

The following overloaded method has been removed from the interface. It allowed characters to be replaced in only part of a string:

String replaceCharacters(String text, Optional<String> startFrom, Optional<String> endAfter);

If you use it in your code, the following migration code should maintain identical functionality. Note that String.indexOf returns -1 when a marker is absent, so these snippets assume that every marker is present in the text.

Specifying only startFrom

var resultingXml = characterReplacer.replaceCharacters(xml, Optional.of("<startTag>"), Optional.empty());

to:

var beforeReplacement = xml.substring(0, xml.indexOf("<startTag>"));
var stringToReplace = xml.substring(xml.indexOf("<startTag>"));
var resultingXml = beforeReplacement + characterReplacer.replaceCharacters(stringToReplace);

Specifying only endAfter

var resultingXml = characterReplacer.replaceCharacters(xml, Optional.empty(), Optional.of("</EndTag>"));

to:

int endIndex = xml.indexOf("</EndTag>") + "</EndTag>".length();
var stringToReplace = xml.substring(0, endIndex);
var afterReplacement = xml.substring(endIndex);
var resultingXml = characterReplacer.replaceCharacters(stringToReplace) + afterReplacement;

Specifying both startFrom and endAfter

var resultingXml = characterReplacer.replaceCharacters(xml, Optional.of("<middleTag>"), Optional.of("</middleTag>"));

to:

var beforeReplacement = xml.substring(0, xml.indexOf("<middleTag>"));
int endIndex = xml.indexOf("</middleTag>") + "</middleTag>".length();
var stringToReplace = xml.substring(xml.indexOf("<middleTag>"), endIndex);
var afterReplacement = xml.substring(endIndex);
var resultingXml = beforeReplacement + characterReplacer.replaceCharacters(stringToReplace) + afterReplacement;

Deprecated configuration to current configuration migration

Deprecated:

character-replacements {
    char-to-char-replacements = [
        {character = À, replaceWith = A},
        {character = ï, replaceWith = i, replaceInDomOnly = true},
    ]
    list-to-char-replacements = [
        {list = [È, É, Ê, Ë], replaceWith = E}
    ]
    regex-to-char-replacements = [
        {regex = "[\\p{InLatin-1Supplement}]", replaceWith = ., replaceInDomOnly = true}
    ]
}

Migrated:

character-replacements {
    custom-replacer { # (1)
        enabled = true # (2)
        char-to-char-replacements = [
            {character = À, replaceWith = A},
            {character = ï, replaceWith = i, replaceInDomOnly = true},
        ]
        list-to-char-replacements = [
            {list = [È, É, Ê, Ë], replaceWith = E}
        ]
        regex-to-char-replacements = [
            {regex = "[\\p{InLatin-1Supplement}]", replaceWith = ., replaceInDomOnly = true}
        ]
    }
}

(1) The *-to-char-replacements properties are now nested under custom-replacer.
(2) The enabled flag must be set to true for the configuration to load.
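If the removed overload was called in several places, the three substring-based replacements shown earlier in this section can be folded into one helper. The sketch below is hypothetical (PartialReplacement is not part of IPF). It takes the replacer as a UnaryOperator<String>, so characterReplacer::replaceCharacters can be passed in, assuming the single-argument replaceCharacters(String) returns a String as in the snippets above. Unlike those snippets, it throws IllegalArgumentException when a marker is missing. How the removed overload behaved in that case is not covered by this page.

```java
import java.util.Optional;
import java.util.function.UnaryOperator;

/** Hypothetical stand-in for the removed replaceCharacters(text, startFrom, endAfter) overload. */
final class PartialReplacement {
    static String replaceBetween(String text, Optional<String> startFrom, Optional<String> endAfter,
                                 UnaryOperator<String> replacer) {
        // Region starts at the first occurrence of startFrom, or at the beginning of the text
        int start = 0;
        if (startFrom.isPresent()) {
            start = text.indexOf(startFrom.get());
            if (start < 0) {
                throw new IllegalArgumentException("startFrom not found: " + startFrom.get());
            }
        }
        // Region ends just after the first occurrence of endAfter, or at the end of the text
        int end = text.length();
        if (endAfter.isPresent()) {
            int index = text.indexOf(endAfter.get());
            if (index < 0) {
                throw new IllegalArgumentException("endAfter not found: " + endAfter.get());
            }
            end = index + endAfter.get().length();
        }
        if (end < start) {
            throw new IllegalArgumentException("endAfter occurs before startFrom");
        }
        // Only the region is passed to the replacer; text outside it is kept verbatim
        return text.substring(0, start) + replacer.apply(text.substring(start, end)) + text.substring(end);
    }
}
```

For example, replaceBetween(xml, Optional.of("<middleTag>"), Optional.of("</middleTag>"), characterReplacer::replaceCharacters) corresponds to the "both startFrom and endAfter" case.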

Migration for services using the Human Task Manager

As mentioned in the HTM release notes, a change has been made to the headers included on the task closed notifications sent by the service.

While the consumers have been kept backward-compatible, the change does affect the deployment order of orchestration services that use the Human Task Manager. Every service that registers HTM tasks and receives task notifications from HTM must be upgraded and deployed before HTM itself is upgraded.

Failure to do so may result in those orchestration services ignoring task notifications.

Migrations for TIPS CSM character replacement

To preserve the legacy character replacement behaviour, provide the following config in your application:

character-replacements {
    lookup-table-replacer = null # (1)
    custom-replacer { # (2)
        enabled = true
        regex-to-char-replacements = [
            {regex = "[^a-zA-Z0-9\\/\\-\\?:().,'+\\s]", replaceWith = ., replaceInDomOnly = true}
        ]
    }
}

(1) This removes the SEPA conversion table configuration.
(2) This reinstates the behaviour from IPF release 2025.2.0.
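The sketch below applies the same regex to a plain string with java.util.regex, to show what the reinstated rule does. Every character outside the allowed set is replaced with '.'. The allowed set is letters, digits, whitespace and / - ? : ( ) . , ' +. This is an illustration only. It does not model replaceInDomOnly or the rest of the IPF character replacement pipeline, and TipsLegacyRegexDemo is a hypothetical name.

```java
import java.util.regex.Pattern;

/** Hypothetical illustration of the legacy TIPS regex-to-char rule on a plain string. */
final class TipsLegacyRegexDemo {
    // Same pattern as the HOCON value: both HOCON's "\\" and Java's "\\" unescape to one backslash
    static final Pattern NOT_ALLOWED = Pattern.compile("[^a-zA-Z0-9\\/\\-\\?:().,'+\\s]");

    static String apply(String text) {
        // Each character outside the allowed set becomes '.'
        return NOT_ALLOWED.matcher(text).replaceAll(".");
    }
}
```

For example, apply("Café Ünïon #1") returns "Caf. .n.on .1", while apply("Ref: A/B-1?") is returned unchanged.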

Migration for client implementations of the Payment Notification Service

As mentioned in the aom release notes, client implementations may require an update to accommodate changes in the following notification service domain classes:

- PdsObjectContainer
  - The pds field type has changed from Object to JsonNode.
  - The structure of the pds field can be inferred from the typeName and version fields. The typeName is derived from the original PDS object's Java class name.
- CreditTransferInitiationToPaymentStatusReportMapper
  - The ccti parameter has changed from CustomerCreditTransferInitiationV09 to JsonNode. An instance of CustomerCreditTransferInitiationV09 can be converted to a JsonNode using SerializationHelper.objectMapper().valueToTree(ccti).
  - The return type has changed from CustomerPaymentStatusReportV10 to JsonNode. The returned JsonNode retains the pain.002.001.10 message structure. It can be converted to an instance of CustomerPaymentStatusReportV10 using SerializationHelper.objectMapper().convertValue(pain002JsonNode, CustomerPaymentStatusReportV10.class).